This is a request for best practices or perhaps, ultimately, a feature request…

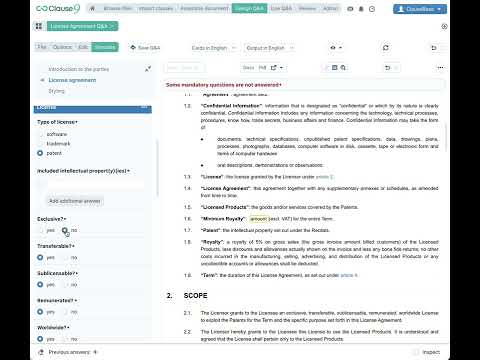

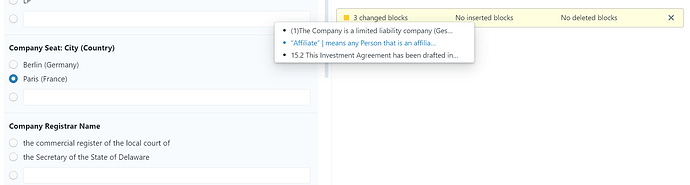

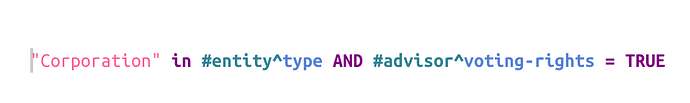

Clausebase is remarkably powerful and, as a result, complicated to use. The complexity of coding introduces plenty of opportunities for errors and thus the need for a really good “QA” (quality assurance) process. But QA’ing templates is very challenging because you only see the text generated for the particular set of conditions you select (i.e., when answering questions in the Q&A). Repeatedly going back to the Q&A and regenerating the document for every possible permutation of datafield choices would be pretty much impossible. Yet somehow, one has to see all the different generated versions of the document to check that the language renders correctly, without typos, coding errors, or other issues.

Are there any best practices to do this that I might not be aware of?

Without the ability to QA all the document possibilities in an efficient way, there is no way to be confident that all versions of a document can be generated without errors. To put it simply: we are taking a lot of time QA’ing our documents and we are still uncovering errors in our work. It’s proving very difficult to find all the issues during the QA / testing process, even though that process takes us a long time.

To take a simple example, an equity agreement might have a single-trigger acceleration provision and a double-trigger provision. One needs to review both. Sure, one could change the Q&A and regenerate but that’s time-consuming. Now multiply that by all of the different clause and datafield possibilities.
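As a rough illustration of that multiplication, here is a minimal Python sketch. The datafield names and options are made up for the example, and nothing in it is a Clausebase API; it only counts how many distinct sets of Q&A answers a reviewer would have to regenerate to see every version of the document.

```python
from itertools import product
from math import prod

# Hypothetical datafields and their possible answers (illustrative only,
# not taken from any real template).
datafields = {
    "acceleration": ["none", "single-trigger", "double-trigger"],
    "vesting_schedule": ["monthly", "quarterly", "annual"],
    "governing_law": ["Delaware", "California", "New York"],
    "include_non_compete": [True, False],
}

def count_combinations(fields):
    """Number of distinct answer sets = product of the option counts."""
    return prod(len(options) for options in fields.values())

def all_answer_sets(fields):
    """Yield every answer set as a dict, one per full document to review."""
    names = list(fields)
    for values in product(*(fields[name] for name in names)):
        yield dict(zip(names, values))

print(count_combinations(datafields))  # 3 * 3 * 3 * 2 = 54
```

Even with only four small datafields, that is 54 full regenerations, and each additional question multiplies the total again.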

What might work better is if Clausebase had some kind of QA mode that outputs all the possible different clauses that could be generated in a document.

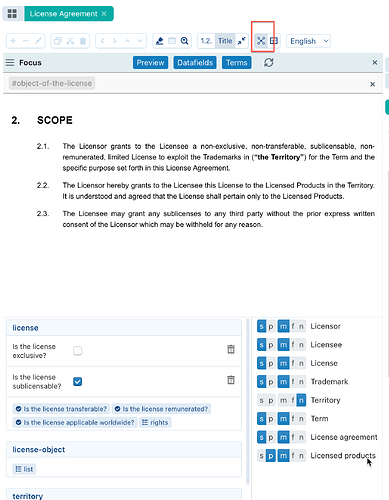

It’s somewhat the same issue for datafields. I’d want to see each choice for a datafield, to ensure that the choices are coded correctly. So, to take the above idea further, the generated document in my proposed QA mode could have a placeholder for each datafield and show (e.g., in a footnote) each of the text options that could be generated for that field.
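To make the proposal concrete, here is a rough Python sketch of what such a QA view could produce. Everything in it is an assumption for illustration: the `clauses` and `datafield_options` structures, the `render_qa_view` function, and the `[IF ...]` and `^n` notation are hypothetical and do not reflect how Clausebase stores or renders templates.

```python
# Hypothetical template model (not Clausebase's actual data structures).
clauses = [
    {"condition": "acceleration = single-trigger",
     "text": "Vesting accelerates in full upon a {event}."},
    {"condition": "acceleration = double-trigger",
     "text": "Vesting accelerates if a {event} is followed by "
             "termination within {window}."},
]
datafield_options = {
    "event": ["Change of Control", "Sale of the Company"],
    "window": ["12 months", "18 months"],
}

def render_qa_view(clauses, options):
    """Render every clause variant once, replacing each datafield with a
    numbered placeholder and listing its options in a footnote."""
    footnote_ids = {}  # datafield name -> footnote number
    body = []
    for clause in clauses:
        text = clause["text"]
        for name in options:
            token = "{" + name + "}"
            if token in text:
                # Reuse the footnote number if this datafield was seen before.
                number = footnote_ids.setdefault(name, len(footnote_ids) + 1)
                text = text.replace(token, f"[{name}]^{number}")
        body.append(f"[IF {clause['condition']}] {text}")
    notes = [
        f"^{number} {name}: " + " | ".join(options[name])
        for name, number in sorted(footnote_ids.items(), key=lambda kv: kv[1])
    ]
    return "\n".join(body + [""] + notes)

print(render_qa_view(clauses, datafield_options))
```

In this sketch both acceleration clauses appear in a single output, each tagged with its condition, and the `event` and `window` placeholders point to footnotes listing their options. A reviewer would read each clause variant and each datafield option once, rather than once per combination of answers.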

I’m not sure if there’s a complete way to do what I am suggesting, but I think that in order for us to create templates efficiently, we need better QA tools than we currently have. As things stand now, we are struggling to arrive at templates that we feel totally confident about. We eventually get there, but I wouldn’t say that we do so in a time-efficient manner. And of course, time is a precious commodity in our business.